By Dimi Sandu, Head of Solutions Engineering (EMEA)

A question often asked by people purchasing CCTV is, “How far can this camera see?” Like the human eye, a camera can see as far as the horizon (in an open space), but the further away an object is, the harder it is to make out its details. This is where Pixels Per Foot (PPF) comes in: it quantifies how much detail the recorded footage captures of the people or objects in it.

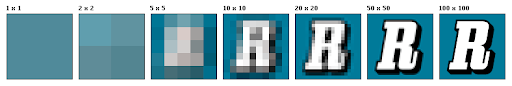

In a digital image, the pixel is the smallest element, and millions of pixels are arranged in rows and columns to form a grid (e.g., a 5 MP camera captures roughly 5 million pixels per image). All else being equal, the more pixels an image has, the more detail it can show: a 12 MP picture of a scene will be clearer and more detailed than a 5 MP one. The diagram below shows the clarity of a character displayed at different resolutions, from 1 pixel to 10,000 pixels (100 × 100):

Within a picture, different people or objects are displayed with different levels of clarity depending on how close they are to the camera, because the number of pixels covering each of them differs. PPF is inversely related to distance: people or objects further away are covered by fewer pixels per foot, so they appear less clearly. For a fixed lens, doubling the distance roughly halves the PPF.
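A common way to put numbers on this relationship is to divide the camera's horizontal pixel count by the width of the scene at the subject's distance, where that width is 2 × distance × tan(HFOV / 2) for a horizontal field of view HFOV. The sketch below assumes a rectilinear (non-fisheye) lens, a subject near the centre of the frame, and a hypothetical 5 MP camera with 2592 horizontal pixels and a 100° HFOV; it ignores lens distortion, focus, and compression.

```python
import math


def scene_width_ft(distance_ft: float, hfov_deg: float) -> float:
    """Width of the scene (feet) spanned by the frame at the given distance."""
    return 2 * distance_ft * math.tan(math.radians(hfov_deg) / 2)


def ppf(horizontal_px: int, hfov_deg: float, distance_ft: float) -> float:
    """Pixels per foot: horizontal pixels spread across the scene width at that distance."""
    return horizontal_px / scene_width_ft(distance_ft, hfov_deg)


# Hypothetical camera: 2592 px wide (a common 5 MP width), 100° horizontal field of view.
for distance in (10, 20, 40):
    print(f"{distance:>2} ft: {ppf(2592, 100, distance):.1f} PPF")
```

Each doubling of distance halves the estimated PPF, which is the inverse relationship described above.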

Use PPF when doing surveys

Here are a few rules of thumb for site walks and for scoping the required number of cameras. First, for footage that a system operator will review, industry best practices recommend capturing the surveyed subjects at 50 PPF or more, even though people naturally hold different opinions about image quality. Below 50 PPF, faces become harder to distinguish.
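Rearranging the usual PPF estimate (horizontal pixels divided by 2 × distance × tan(HFOV / 2)) gives the farthest distance at which a camera still meets a target such as the 50 PPF guideline. The camera figures here (2592 horizontal pixels, 100° HFOV) are hypothetical, and the same flat-scene, rectilinear-lens assumptions apply, so treat the result as a survey estimate rather than a guarantee.

```python
import math


def max_distance_ft(horizontal_px: int, hfov_deg: float, target_ppf: float) -> float:
    """Farthest distance (feet) at which a subject is still captured at target_ppf or better."""
    # Solved from PPF = horizontal_px / (2 * distance * tan(hfov / 2)) for distance.
    return horizontal_px / (2 * target_ppf * math.tan(math.radians(hfov_deg) / 2))


FEET_TO_METERS = 0.3048

# Hypothetical 5 MP camera (2592 px wide, 100° horizontal FOV) against the 50 PPF guideline.
limit_ft = max_distance_ft(2592, 100, 50)
print(f"50+ PPF out to roughly {limit_ft:.1f} ft ({limit_ft * FEET_TO_METERS:.1f} m)")
```

Anything beyond that distance would need a closer camera, a higher resolution, or a narrower field of view to stay above 50 PPF.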

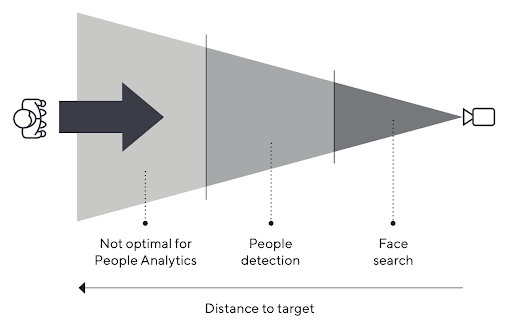

Secondly, analytics (e.g., people detection, face search) require a minimum level of clarity: people or objects captured below that level will not be analyzed or show up in searches.

In the image below, as the person walks toward the camera, their distance decreases and their PPF increases until the onboard algorithms pick them up and analyze them further.

To increase a camera’s detection and analysis distance, one can either:

- deploy a higher-resolution camera (at the same field of view, more pixels means higher pixel density, so objects at the same distance are captured at a higher PPF), or

- use optical zoom (gaining clarity at distance at the expense of field of view, since more zoom means a narrower viewing angle).
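Both options act on the same estimate, PPF ≈ horizontal pixels / (2 × distance × tan(HFOV / 2)): resolution raises the numerator, and zoom shrinks the denominator by narrowing the HFOV. The configurations below are hypothetical (common 5 MP and 8 MP sensor widths, illustrative fields of view), not specifications of any particular camera.

```python
import math


def ppf(horizontal_px: int, hfov_deg: float, distance_ft: float) -> float:
    """Estimated pixels per foot (flat-scene, rectilinear-lens approximation)."""
    return horizontal_px / (2 * distance_ft * math.tan(math.radians(hfov_deg) / 2))


DISTANCE_FT = 60  # subject distance used for the comparison (illustrative)

# Hypothetical configurations: (horizontal pixels, horizontal FOV in degrees).
OPTIONS = {
    "5 MP, zoomed out (100°)": (2592, 100),
    "8 MP, zoomed out (100°)": (3840, 100),
    "5 MP, zoomed in (35°)": (2592, 35),
}

for name, (px, hfov) in OPTIONS.items():
    print(f"{name}: {ppf(px, hfov, DISTANCE_FT):.1f} PPF at {DISTANCE_FT} ft")
```

With these made-up numbers, moving from 2592 to 3840 pixels raises PPF by about 1.5× with no loss of coverage, while narrowing the HFOV from 100° to 35° raises it by about 3.8× but covers only roughly a quarter of the width.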

In the example below, a camera is looking at the same subject when they are 25 feet (about 7.5 meters) and 150 feet (about 45 meters) away. In the second image, it is impossible to tell who the person is, and in poor lighting it is hard to see that anyone is there at all.
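Because the PPF estimate is inversely proportional to distance, moving the subject from 25 feet to 150 feet divides their PPF by six. The sketch below uses a hypothetical camera (2592 horizontal pixels, 100° HFOV) and also computes the narrower HFOV that would, under the same flat-scene assumptions, restore the 25-foot PPF at 150 feet; `hfov_for_same_ppf` is an illustrative helper, not a vendor tool.

```python
import math


def ppf(horizontal_px: int, hfov_deg: float, distance_ft: float) -> float:
    """Estimated pixels per foot for a subject at distance_ft."""
    return horizontal_px / (2 * distance_ft * math.tan(math.radians(hfov_deg) / 2))


def hfov_for_same_ppf(hfov_deg: float, near_ft: float, far_ft: float) -> float:
    """Horizontal FOV (degrees) giving a subject at far_ft the PPF it had at near_ft."""
    # PPF stays constant when distance * tan(hfov / 2) stays constant.
    half_angle = math.atan(math.tan(math.radians(hfov_deg) / 2) * near_ft / far_ft)
    return math.degrees(2 * half_angle)


PX, HFOV = 2592, 100  # hypothetical 5 MP camera, 100° horizontal field of view
NEAR_FT, FAR_FT = 25, 150

print(f"PPF at {NEAR_FT} ft: {ppf(PX, HFOV, NEAR_FT):.1f}")
print(f"PPF at {FAR_FT} ft: {ppf(PX, HFOV, FAR_FT):.1f}")
print(f"HFOV that restores it at {FAR_FT} ft: {hfov_for_same_ppf(HFOV, NEAR_FT, FAR_FT):.1f}°")
```

Restoring the detail this way is the optical-zoom trade-off: the subject regains its 25-foot PPF, but at 150 feet the frame now spans only one sixth of the width the wide view covered.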

Zooming in optically recovers that lost detail, at the expense of field of view (less of the scene is visible):

An example using Verkada cameras

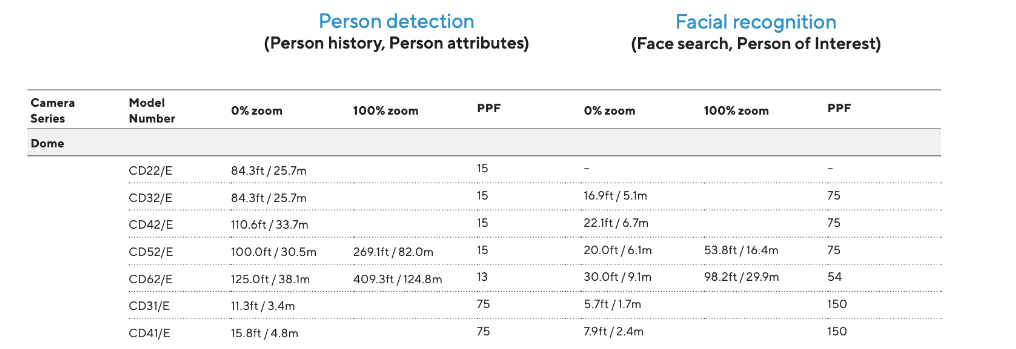

At the bottom of the People Analytics Guide, found here, is a list of each camera model and the distance at which its analytics stop working. Varifocal models (which have optical zoom) are listed for both their fully zoomed-out and fully zoomed-in settings.

As seen in the diagram below, the same 5 MP CD52 camera picks people up at up to 30 meters when fully zoomed out, and at up to 82 meters when fully zoomed in. Because Face Search and Person of Interest rely on detailed analysis of facial features (such as their length and width), they require a higher PPF, so the person must be much closer to the camera.

As an example, imagine a camera pointed at a door 8 meters away, with a requirement to run Face Search and Person of Interest on everyone who walks through it. One could either deploy a 5 MP CD52 and zoom it in, or deploy an 8 MP CD62 and leave it fully zoomed out. The higher pixel density of the CD62 means people coming through the door are recorded at a higher PPF, within range for the advanced analytics to operate.

Frequently Asked Questions

What is PPF in video surveillance?

PPF stands for Pixels Per Foot. It quantifies the clarity of video footage by the number of pixels that cover each foot of a person or object in the frame. The higher the PPF, the more detailed the image.

Why is PPF important for video surveillance?

Higher PPF results in clearer video footage. This allows security personnel to more easily identify individuals and objects in the video. A higher PPF may be required for facial recognition, license plate recognition, and other video analytics.

What is a good PPF for face recognition?

Industry best practices recommend at least 50 PPF for recognizable facial images.

How can I increase PPF?

Use higher-resolution cameras and/or optical zoom. Higher resolution increases pixel density, while optical zoom magnifies objects to take up more pixels.

How does PPF change with distance from the camera?

PPF decreases as distance increases, since objects take up fewer pixels the further away they are.

Should I use PPF or field of view when selecting cameras and placement?

Use both. First determine the PPF your video analytics require, then select the camera with the widest field of view that still achieves that PPF at the relevant distances.
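That selection rule can be sketched as a filter followed by a maximum: keep the options that reach the required PPF at the working distance, then take the one with the widest HFOV. The candidate list is entirely hypothetical (illustrative resolutions and fields of view, not the specifications of any Verkada model), the 26-foot distance stands in for a doorway roughly 8 meters away, and the 50 PPF target is the operator guideline from earlier; real analytics thresholds should come from the vendor's documentation.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """A hypothetical camera configuration (model plus zoom setting)."""
    name: str
    horizontal_px: int
    hfov_deg: float


def ppf(option: Option, distance_ft: float) -> float:
    """Estimated pixels per foot for a subject at distance_ft."""
    return option.horizontal_px / (2 * distance_ft * math.tan(math.radians(option.hfov_deg) / 2))


def pick_widest(options: list[Option], target_ppf: float, distance_ft: float) -> Option | None:
    """Return the widest-FOV option that reaches target_ppf at distance_ft, or None."""
    feasible = [o for o in options if ppf(o, distance_ft) >= target_ppf]
    return max(feasible, key=lambda o: o.hfov_deg, default=None)


CANDIDATES = [
    Option("5 MP, 100° (hypothetical)", 2592, 100),
    Option("5 MP, 40° (hypothetical)", 2592, 40),
    Option("8 MP, 100° (hypothetical)", 3840, 100),
    Option("8 MP, 70° (hypothetical)", 3840, 70),
]

best = pick_widest(CANDIDATES, target_ppf=50, distance_ft=26)
print(best.name if best else "No candidate reaches the target PPF")
```

With these made-up numbers, the 5 MP wide setting falls short at 26 feet while the 8 MP wide setting clears 50 PPF, mirroring the CD52/CD62 trade-off described earlier; the real outcome depends on each model's actual lens and the analytics' own thresholds.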

Is a higher PPF always better?

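Not necessarily. Raising PPF through higher resolution increases storage and bandwidth requirements, while raising it through optical zoom narrows the area each camera covers. Optimize PPF for the required use case rather than maximizing it everywhere.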